Alpit Patel

Senior Automation Consultant & CRM Architect

Alpit Chikhaliya has 4+ years of hands-on experience designing CRM-driven automation systems for healthcare organizations across the United States, United Kingdom, and UAE (Dubai). He has worked directly with 40+ clinics and multi-location healthcare groups, primarily fixing failed or underperforming automation and AI calling initiatives.

His work focuses on operational reality, not demos—where systems break under pressure, where staff behavior conflicts with CRM logic, and where compliance fails quietly if not enforced structurally.

Why AI Calling in Healthcare Breaks More Often Than It Works

Healthcare calling looks simple until you try to automate it.

Appointment confirmations, missed-call recovery, rescheduling, reminders—on paper, these are repeatable workflows. In reality, they are fragile, human-dependent processes that collapse under volume, interruptions, and inconsistent data.

Front-desk staff are constantly multitasking. Appointment statuses are updated late or skipped entirely. Providers change schedules without notice. CRMs contain half-clean data that everyone assumes is “good enough.”

This is why so many deployments of an AI Calling Agent for Healthcare fail quietly: not with dramatic outages, but with flat results, confused staff, and leadership eventually concluding, “AI doesn’t work for us.”

The truth is harsher:

AI calling works only when treated as an operational system, not as a communication shortcut.

This article is written from lived implementation experience—where systems broke, where assumptions failed, and where AI Calling for Clinics finally worked only after painful corrections. If you want hype, stop reading. If you want reality, continue.

What an AI Calling Agent Actually Does (and Where It Breaks First)

An AI Calling Agent for Healthcare is not an intelligent receptionist.

It is a controlled voice interface to your CRM.

It can:

- Place and receive calls

- Capture intent (confirm, reschedule, cancel, callback request)

- Log outcomes

- Trigger predefined workflows

What it cannot do:

- Fix bad data

- Enforce staff discipline

- Guess intent safely

- Compensate for broken operations
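To make the can/cannot split concrete, here is a minimal Python sketch, assuming the call platform hands back a plain-text intent label. The four `Intent` values come from the list above; the workflow names (`mark_confirmed`, `human_review`, and so on) and the `route_intent` helper are hypothetical. Anything outside the closed set is routed to a person rather than guessed.

```python
from dataclasses import dataclass, field
from enum import Enum


class Intent(Enum):
    """The closed set of intents the agent may capture."""
    CONFIRM = "confirm"
    RESCHEDULE = "reschedule"
    CANCEL = "cancel"
    CALLBACK_REQUEST = "callback_request"


# Hypothetical workflow names: each intent triggers exactly one predefined workflow.
WORKFLOWS = {
    Intent.CONFIRM: "mark_confirmed",
    Intent.RESCHEDULE: "start_reschedule",
    Intent.CANCEL: "mark_cancelled",
    Intent.CALLBACK_REQUEST: "create_callback_task",
}


@dataclass
class CallOutcomeLog:
    entries: list = field(default_factory=list)

    def record(self, appointment_id: str, raw_label: str, workflow: str) -> None:
        # Every call outcome is logged, including the ones sent to a human.
        self.entries.append((appointment_id, raw_label, workflow))


def route_intent(appointment_id: str, raw_label: str, log: CallOutcomeLog) -> str:
    """Map a captured label to a predefined workflow; never guess."""
    try:
        intent = Intent(raw_label)
    except ValueError:
        # Unrecognized label: do not infer what the patient "probably" meant.
        workflow = "human_review"
    else:
        workflow = WORKFLOWS[intent]
    log.record(appointment_id, raw_label, workflow)
    return workflow
```

The AI only produces the label; the mapping from label to action is fixed configuration, which is what keeps it a voice interface to the CRM rather than a decision-maker.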

This sounds obvious. In practice, this is where most projects fail.

“We assumed front desks would update appointment statuses daily. That assumption cost us 30 days of zero improvement.”

When appointment statuses are wrong, AI calls the wrong patients.

When staff override fields casually, automation misfires.

When providers change schedules manually, rescheduling logic collapses.

The AI didn’t fail.

The operation did.

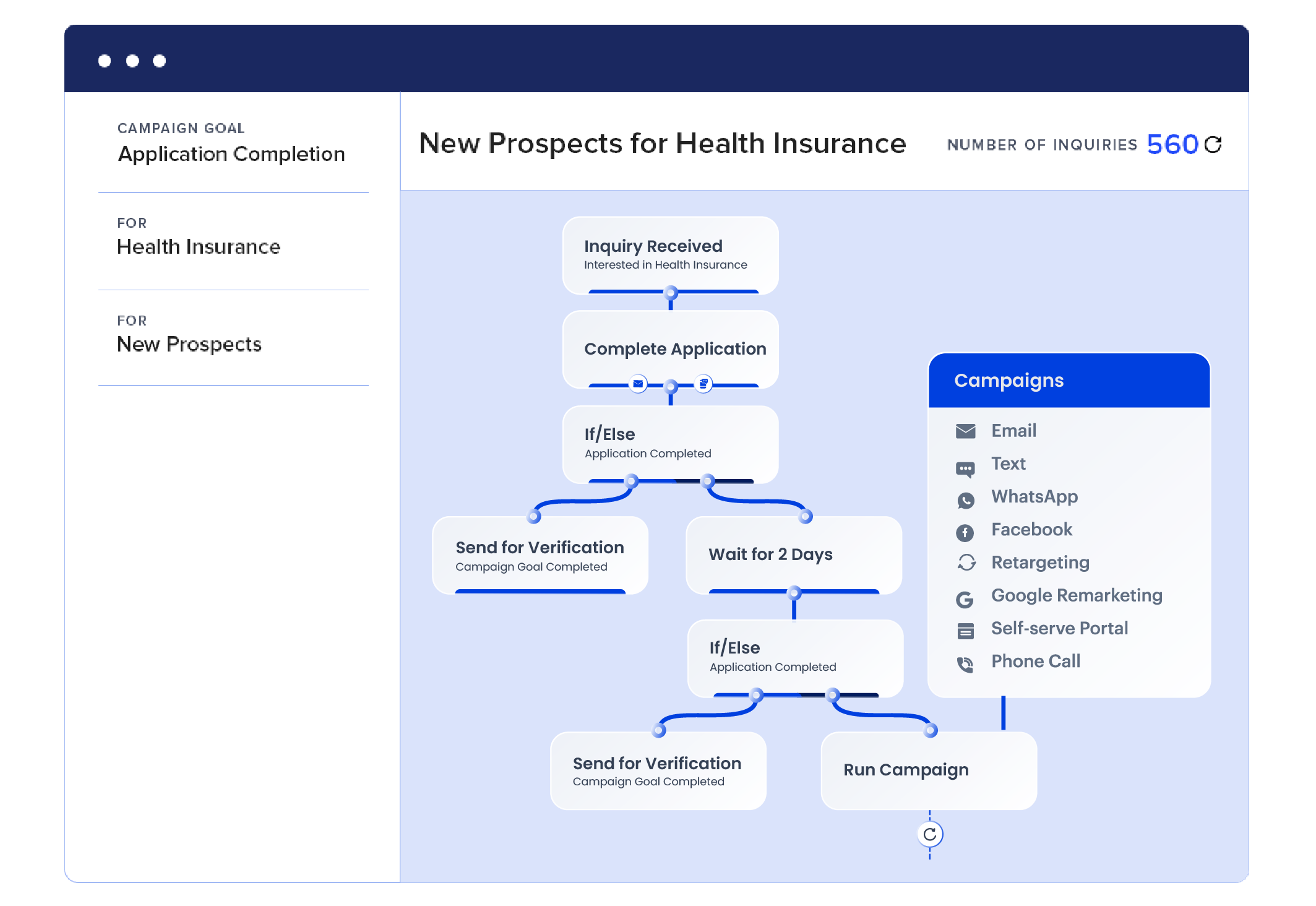

Why CRM Architecture Is Non-Negotiable

Every failed Healthcare AI Calling Services project we’ve audited shared one trait:

The CRM was treated as storage, not control.

In healthcare, the CRM must function as a decision authority:

- Who is eligible to be called

- Why they are being called

- What outcomes are allowed

- When humans must intervene

If your CRM cannot answer those questions deterministically, AI calling becomes unpredictable—and unpredictability is unacceptable in healthcare operations.
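One way to make those four questions deterministic is to encode them as a pure function of CRM fields. This is a sketch only: the field names, status values, and the 1–3 day confirmation window are assumptions, not a Go High Level schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AppointmentRecord:
    # Hypothetical CRM fields; real names depend on your CRM configuration.
    status: str              # e.g. "scheduled", "confirmed", "cancelled"
    appointment_date: date
    call_consent: bool       # explicit consent field, never inferred
    needs_human_review: bool


@dataclass(frozen=True)
class CallDecision:
    eligible: bool
    reason: str                   # why the patient is (or is not) being called
    allowed_outcomes: tuple = ()  # what the call is allowed to produce


def decide_confirmation_call(rec: AppointmentRecord, today: date) -> CallDecision:
    """Pure function of CRM data: the same record always yields the same decision."""
    if rec.needs_human_review:
        return CallDecision(False, "flagged for human review")  # humans intervene
    if not rec.call_consent:
        return CallDecision(False, "no recorded consent")
    if rec.status != "scheduled":
        return CallDecision(False, f"status is {rec.status!r}")
    days_out = (rec.appointment_date - today).days
    if not 1 <= days_out <= 3:  # hypothetical confirmation window
        return CallDecision(False, f"appointment is {days_out} days out")
    return CallDecision(
        True,
        "unconfirmed appointment in the confirmation window",
        ("confirm", "reschedule", "cancel", "callback_request"),
    )
```

Because the function reads only CRM fields, a wrong call traces back to a wrong field, which is exactly where the fix belongs.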

This is why healthcare CRM automation must be solved before AI calling is introduced. Automation amplifies structure. If the structure is weak, failure scales.

How an Autoesta Go High Level Expert Designs CRM Logic (Uncomfortable Reality)

An Autoesta Go High Level expert does not “set up pipelines.”

They enforce operational behavior.

Experience Friction (Real World)

“This looks clean in documentation. In real clinics, staff resist lifecycle discipline unless leadership enforces it.”

Without enforcement:

- AI confirms cancelled appointments

- Reschedules overwrite provider availability

- Follow-ups trigger on completed visits

CRM logic only works when:

- lifecycle stages are mandatory

- overrides are logged

- humans cannot silently bypass the system

Most implementations avoid this because it creates friction.

Friction is exactly why it works.
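The three conditions above (mandatory stages, logged overrides, no silent bypass) can be expressed as a small state machine. The stage names and transition table below are hypothetical; the point is that an off-path change fails unless someone supplies a reason, and every change lands in an audit log.

```python
from dataclasses import dataclass, field

# Hypothetical lifecycle: every appointment sits in exactly one of these stages.
ALLOWED_TRANSITIONS = {
    "scheduled": {"confirmed", "rescheduled", "cancelled"},
    "confirmed": {"rescheduled", "cancelled", "completed", "no_show"},
    "rescheduled": {"confirmed", "cancelled"},
    "cancelled": set(),
    "completed": set(),
    "no_show": {"rescheduled"},
}


class TransitionError(Exception):
    pass


@dataclass
class Appointment:
    appointment_id: str
    stage: str = "scheduled"
    audit_log: list = field(default_factory=list)

    def move_to(self, new_stage: str, actor: str, override_reason: str = "") -> None:
        if new_stage not in ALLOWED_TRANSITIONS:
            raise TransitionError(f"unknown stage {new_stage!r}")
        on_path = new_stage in ALLOWED_TRANSITIONS[self.stage]
        if not on_path and not override_reason:
            # No silent bypass: an off-path change needs a stated reason.
            raise TransitionError(f"{self.stage} -> {new_stage} needs an override reason")
        # Every change, normal or override, is recorded with who made it.
        self.audit_log.append({
            "from": self.stage, "to": new_stage, "actor": actor,
            "override": not on_path, "reason": override_reason,
        })
        self.stage = new_stage
```

The friction is deliberate: staff can still correct a record, but they cannot do it invisibly, and the automation always sees a valid stage.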

❌ What We Actively Advise Clinics NOT To Do

Authority means drawing hard lines.

We explicitly advise clinics not to do the following:

❌ Deploy AI calling without enforced lifecycle stages

❌ Allow AI to reschedule provider calendars directly

❌ Record calls by default

❌ Allow marketing teams to control healthcare automation

❌ Enable free-form AI conversations with patients

❌ Automate before CRM data cleanup is complete

If a vendor says these are “fine,” they are optimizing for speed, not safety.

How AI Calling, CRM, and Automation Actually Work Together

AI Layer (Strictly Limited)

The AI layer is allowed to:

- capture intent

- confirm availability

- escalate uncertainty

It is not allowed to:

- interpret symptoms

- give medical advice

- improvise responses
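Here is one way to keep the AI layer from improvising: it may only speak approved templates, and anything clinical or unscripted goes to a human. The script keys, templates, and keyword list are hypothetical, and a keyword screen is a deliberately crude stand-in for whatever detection your platform provides; it illustrates the control flow, not a production safeguard.

```python
# Hypothetical approved script library: one template per (intent, step).
APPROVED_SCRIPTS = {
    ("confirm", "ask"): "Can you confirm your appointment for {day} at {time}?",
    ("reschedule", "offer"): "{day} at {time} is available. Confirm?",
    ("escalate", "handoff"): "I'll have a member of our team call you back.",
}

# Hypothetical, illustrative keyword screen; not a clinical-grade detector.
CLINICAL_KEYWORDS = ("pain", "bleeding", "medication", "symptom", "dose")


def next_utterance(intent: str, step: str, patient_text: str, **slots: str) -> tuple:
    """Return (script_key, text). Only approved templates are ever spoken."""
    lowered = patient_text.lower()
    if any(word in lowered for word in CLINICAL_KEYWORDS):
        key = ("escalate", "handoff")  # never interpret symptoms
    elif (intent, step) in APPROVED_SCRIPTS:
        key = (intent, step)
    else:
        key = ("escalate", "handoff")  # no template means no improvisation
    # str.format ignores unused keyword slots, so the handoff line needs none.
    return key, APPROVED_SCRIPTS[key].format(**slots)
```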

CRM Layer (Single Source of Truth)

The CRM owns:

- patient status

- consent

- escalation rules

If CRM data is wrong, AI behavior becomes unsafe.

Automation Layer (Where Most Failures Happen)

This is where things break:

- overlapping triggers

- missing exception handling

- unclear ownership

This is the core risk of healthcare workflow automation when rushed.
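Two of those failure modes, overlapping triggers and unclear ownership, can be guarded structurally. A minimal sketch, assuming a hypothetical in-memory registry and a 24-hour contact cooldown per appointment (both assumptions): a workflow cannot be registered without a named owner, an unregistered workflow raises instead of firing silently, and a second trigger for the same appointment inside the window is suppressed.

```python
from datetime import datetime, timedelta


class WorkflowRegistry:
    """Every workflow has a named owner; one outbound contact per appointment per window."""

    def __init__(self, cooldown: timedelta = timedelta(hours=24)):
        self.owners: dict = {}
        self.cooldown = cooldown              # hypothetical default
        self.last_contact: dict = {}          # appointment_id -> (workflow, time)

    def register(self, workflow: str, owner: str) -> None:
        if not owner:
            raise ValueError(f"workflow {workflow!r} has no owner")
        self.owners[workflow] = owner

    def try_fire(self, workflow: str, appointment_id: str, now: datetime) -> bool:
        if workflow not in self.owners:
            # Missing configuration is an error, not a silent no-op.
            raise KeyError(f"unregistered workflow {workflow!r}")
        previous = self.last_contact.get(appointment_id)
        if previous and now - previous[1] < self.cooldown:
            # Another trigger already contacted this patient: suppress, don't stack calls.
            return False
        self.last_contact[appointment_id] = (workflow, now)
        return True
```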

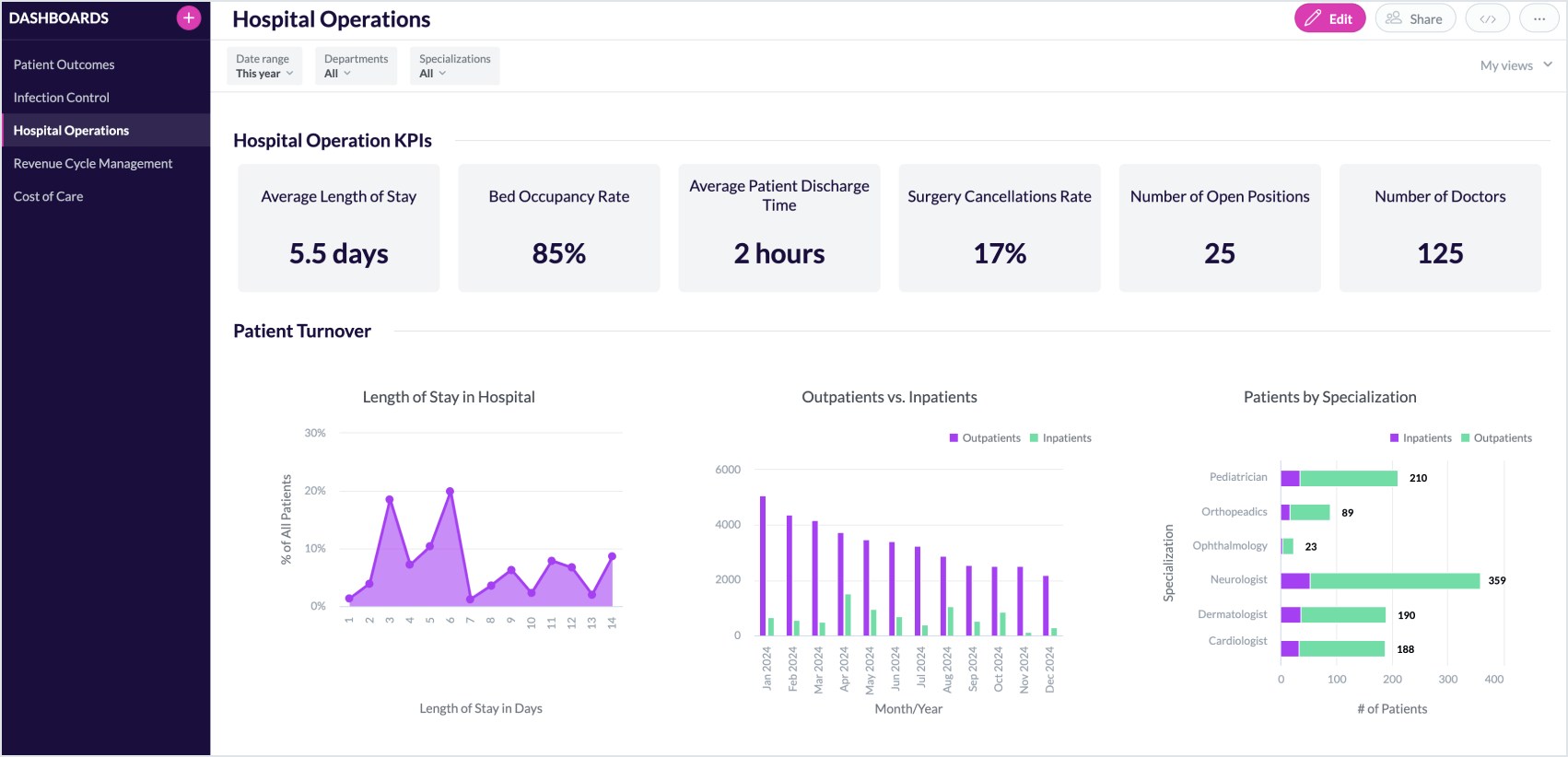

Real Case Study (Including the Failure Phase)

Multi-location outpatient clinic – United States

6 locations | ~3,200 appointments/month

Results After Stabilization

| Metric | Before | After | Change |
|---|---|---|---|
| No-show rate | 21.8% | 9.6% | ↓ 56% (relative) |
| Confirmation rate | 67% | 91% | ↑ 24 pts |
| Missed-call recovery | 0% | 72% | ↑ 72 pts |
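The Change column mixes two kinds of arithmetic. The no-show figure is a relative change (12.2 points off a 21.8% baseline is roughly 56%), while a move from 67% to 91% is a 24-point gain, which is about 36% in relative terms. Missed-call recovery starts from 0%, so only a point change is meaningful there. A quick check in Python:

```python
def relative_change(before: float, after: float) -> float:
    """Percent change relative to the baseline value."""
    return (after - before) / before * 100


def point_change(before: float, after: float) -> float:
    """Absolute difference in percentage points."""
    return after - before


print(round(relative_change(21.8, 9.6)))   # -56: the no-show drop is relative
print(round(point_change(67, 91)))         # 24: the confirmation gain in points
print(round(relative_change(67, 91), 1))   # 35.8: the same gain in relative terms
print(point_change(0, 72))                 # 72: relative change from 0 is undefined
```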

What Most Case Studies Hide

Weeks 1–3 showed no improvement.

Why?

- staff skipped status updates

- AI called already-cancelled patients

- front desk overrode CRM fields

Only after forced CRM discipline did results appear.

Real AI Call Example (With Safeguards)

Transcript (Simplified)

AI: “Can you confirm your appointment for Thursday at 10am?”

Patient: “I need Friday instead.”

AI: “Friday at 11am is available. Confirm?”

Patient: “Yes.”

What Happened in the System

- Intent captured: reschedule

- CRM status updated

- Provider availability checked

- Confirmation workflow re-fired

- No clinical data accessed

No creativity. No guessing. No improvisation.
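The system steps above can be sketched as two functions, assuming availability arrives as a read-only list of open slot labels and that the agent writes only to the CRM appointment record, never to the provider calendar (consistent with the advice against letting AI reschedule calendars directly). `AppointmentRequest`, `offer_slot`, `apply_reschedule`, and the `fire_workflow` callback are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class AppointmentRequest:
    # Scheduling fields only: no clinical data is read or written.
    appointment_id: str
    slot: str
    status: str = "scheduled"
    history: List[str] = field(default_factory=list)


def offer_slot(requested_day: str, open_slots: List[str]) -> Optional[str]:
    """Pick the first open slot on the requested day from a read-only snapshot."""
    matches = [s for s in open_slots if s.startswith(requested_day + " ")]
    return matches[0] if matches else None  # None means escalate, not guess


def apply_reschedule(appt: AppointmentRequest, new_slot: str,
                     fire_workflow: Callable[[str, str], None]) -> None:
    """Run only after the patient explicitly confirms the offered slot."""
    appt.history.append(f"rescheduled {appt.slot} -> {new_slot}")
    appt.slot = new_slot
    appt.status = "rescheduled"                          # CRM status updated
    fire_workflow("confirmation", appt.appointment_id)   # confirmation re-fired
```

In the transcript, `offer_slot("Friday", ...)` would produce the “Friday at 11am” offer, and `apply_reschedule` would run only after the patient says “Yes.” Separating the offer from the commit keeps the agent from acting on an unconfirmed guess.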

Compliance Is an Operational Discipline (Not a Checkbox)

Compliance Reality Table

| Risk Area | Common Mistake | What Is Enforced |
|---|---|---|
| Consent | Implied consent | Explicit CRM field |
| Call recording | Always on | Disabled by default |
| PHI | Free-form AI conversation | Intent-limited AI |
| After-hours | Full AI access | Triage only |
| Escalation | Manual judgment | Rule-based |

This is how compliance survives audits in real clinics.
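The right-hand column of the table can be expressed as policy defaults. This is a sketch under assumptions: the business hours, the intent names, and treating after-hours `triage` as routing only (no clinical assessment) are hypothetical choices, not a standard.

```python
from dataclasses import dataclass
from datetime import time
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class CallPolicy:
    recording_enabled: bool = False          # call recording disabled by default
    business_open: time = time(8, 0)         # hypothetical clinic hours
    business_close: time = time(18, 0)
    # "triage" here means routing the caller (on-call staff or next-day callback).
    after_hours_intents: FrozenSet[str] = frozenset({"triage"})
    business_hours_intents: FrozenSet[str] = frozenset(
        {"confirm", "reschedule", "cancel", "callback_request", "triage"})


def permitted(policy: CallPolicy, intent: str,
              consent_field: Optional[bool], now: time) -> bool:
    """Consent must be an explicit True in the CRM; a missing value is not consent."""
    if consent_field is not True:
        return False
    in_hours = policy.business_open <= now < policy.business_close
    allowed = policy.business_hours_intents if in_hours else policy.after_hours_intents
    return intent in allowed
```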

Are You Ready for AI Calling? (Reality Check)

You are not ready if:

- staff don’t update appointment status the same day

- leadership avoids enforcing CRM discipline

- patient data is fragmented across tools

- no one owns escalation decisions

If this feels harsh, that is the point: any one of these conditions is enough to make AI calling fail in your clinic.

This checklist is meant to rule some clinics out. That’s intentional.

When AI Calling Is the Wrong Choice

For clinics under 300 appointments per month, AI calling often adds unnecessary complexity.

Manual workflows may be:

- cheaper

- safer

- easier to control

Saying this publicly increases trust—because it’s true.

Hidden Costs Clinics Don’t Expect

Executives consistently underestimate:

- staff retraining resistance

- CRM data cleanup effort

- 30–45 day stabilization phase

- early failure rates

If these costs aren’t mentioned upfront, the advisor lacks real experience.

What the AI Is Explicitly NOT Allowed to Do

The AI is never allowed to:

- diagnose conditions

- give medical advice

- modify clinical records

- speak outside approved intents

- override human decisions

Boundaries are not limitations.

They are safety.

Failure & Escalation Logic (Reality Version)

- AI confidence below threshold → human handoff

- Patient frustration detected → call terminated + staff alert

- CRM conflict detected → no action + review task

- System error mid-call → apology + staff callback

Failure is expected. Handling it defines maturity.
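The four rules above map naturally to a lookup table. A minimal sketch: the event names, action labels, the 0.85 confidence threshold, and the priority order used when several events fire at once are all assumptions to tune for your own clinic.

```python
from enum import Enum
from typing import Optional, Tuple


class Event(Enum):
    LOW_CONFIDENCE = "low_confidence"
    PATIENT_FRUSTRATION = "patient_frustration"
    CRM_CONFLICT = "crm_conflict"
    SYSTEM_ERROR = "system_error"


# Each rule: (what happens on the call, what staff receive).
ESCALATION_RULES = {
    Event.LOW_CONFIDENCE: ("transfer_to_human", "live_handoff"),
    Event.PATIENT_FRUSTRATION: ("end_call", "staff_alert"),
    Event.CRM_CONFLICT: ("take_no_action", "review_task"),
    Event.SYSTEM_ERROR: ("apologize_and_end", "staff_callback_task"),
}

CONFIDENCE_THRESHOLD = 0.85  # hypothetical; tune against your own call data


def detect_event(intent_confidence: float, frustration_flag: bool,
                 crm_conflict: bool, system_error: bool) -> Optional[Event]:
    """Return the highest-priority event (assumed order), or None to proceed."""
    if system_error:
        return Event.SYSTEM_ERROR
    if crm_conflict:
        return Event.CRM_CONFLICT
    if frustration_flag:
        return Event.PATIENT_FRUSTRATION
    if intent_confidence < CONFIDENCE_THRESHOLD:
        return Event.LOW_CONFIDENCE
    return None


def escalate(event: Event) -> Tuple[str, str]:
    return ESCALATION_RULES[event]
```

Keeping the rules in data rather than scattered conditionals means escalation behavior can be reviewed, and audited, in one place.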

Timeline Reality: When Value Actually Appears

- Weeks 1–2: CRM cleanup (painful)

- Weeks 3–4: failed calls + tuning

- Weeks 5–6: stabilization

- Week 8+: measurable ROI

Anyone promising faster results is skipping steps.

A Neutral Word on Providers and Approaches

AI calling can be implemented:

- in-house

- via consultants

- through hybrid models

There is no single “correct” provider.

What matters is operational ownership, not branding.

How Autoesta Works (Without Sales Language)

Autoesta primarily works on:

- fixing broken CRM logic

- enforcing operational discipline

- rebuilding unsafe automation

In some cases, clients are advised not to automate yet.

That is part of responsible healthcare automation.

Frequently Asked Questions (Answered Honestly)

Is AI calling legal in healthcare?

Yes—when consent, audit trails, and strict boundaries exist.

Will patients hate AI calls?

Patients hate missed calls more than structured automation.

What if the AI makes a mistake?

It must fail safely and escalate immediately.

Does this replace staff?

No. It removes repetitive failure points.

Final Thought (No Sales Pitch)

AI calling is not a shortcut.

It is a discipline multiplier.

Weak operations collapse faster.

Strong operations scale cleanly.

Both outcomes are predictable.